This project focuses on AI and computer vision, an area in which I have very little practical experience but which I find greatly interesting. I have been especially interested in the recent controversy surrounding AI art: the claim that AI generators produce plagiarised work because they steal the labour of the artists in their databases. While there are definite concerns where plagiarism and forgery are concerned, I do not think that is the be-all and end-all. I feel these arguments essentially ignore the intricacies of the debate and the real art that is produced using AI as a tool. It isn’t as cut and dried as “AI bad”.

https://www.bbc.co.uk/news/technology-62788725

AI art

Mario Klingemann

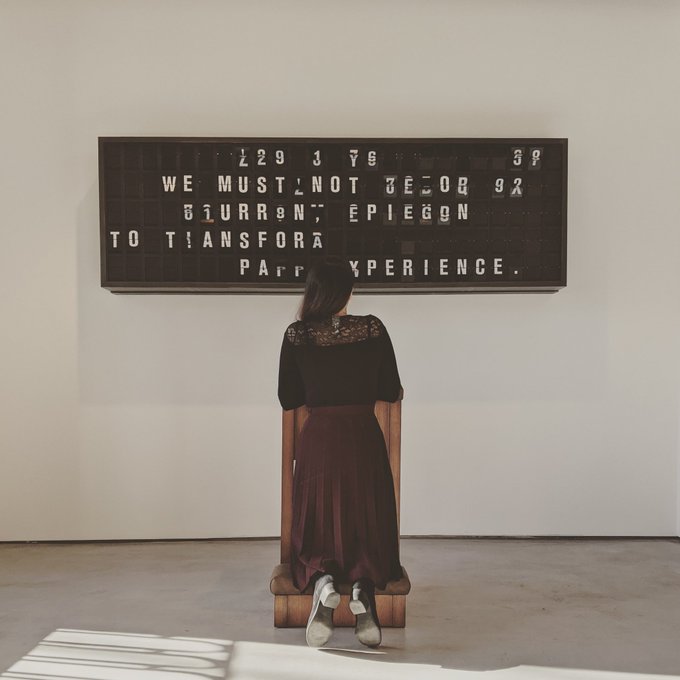

I have been looking into artists shown in the launch and came across this work by Mario Klingemann.

Appropriate Response

I like the idea of this: a gesture, then an action. I also like the more analogue display; the look reminds me of the lights from Stranger Things S1.

I also found this work by Mario very interesting. It’s a “mirror” that shows an amalgamation of faces: all those who have looked into the mirror are combined into one.

I love the staging of the project. It is a very passive interaction that the user may just happen across, and there aren’t many ways in which it can be misused or wrongly interpreted. It reminds me of my own work from the Control project last year; perhaps there could be a machine learning spin on the setup of that project.

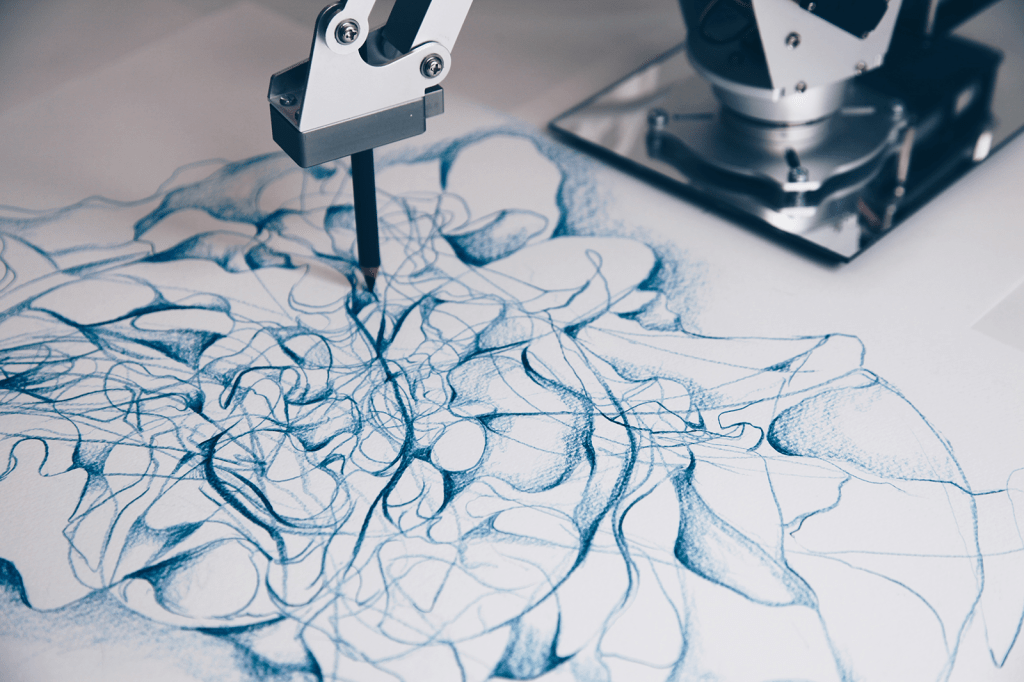

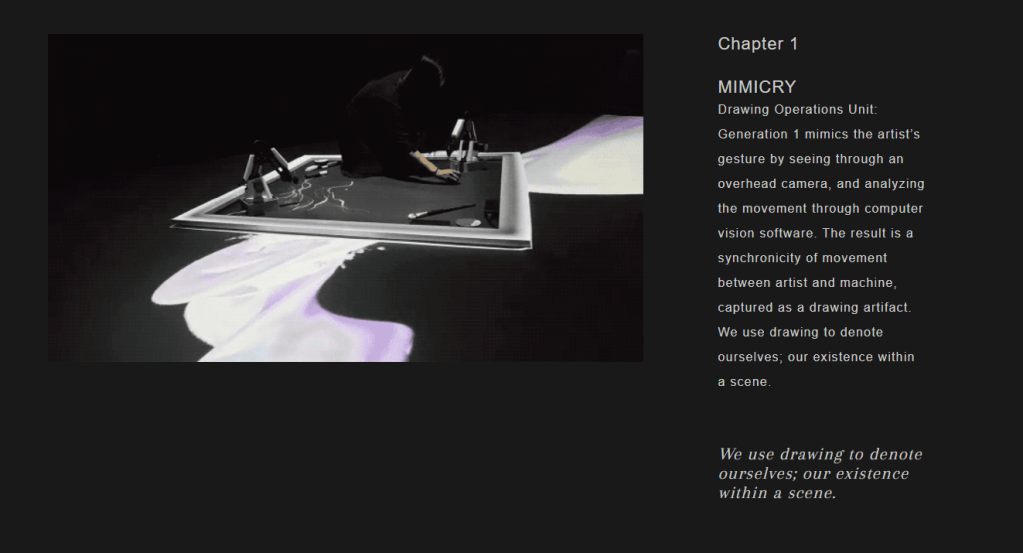

Sougwen Chung

I have looked at Chung’s work before. Last year, for the Control project, I took an interest in her human-machine collaboration projects.

The machine learns from the artist’s drawing style, with gestures being saved in a memory bank. This, hand in hand with generation 1, culminated in a performance art piece based on Mimicry, Memory and Future Speculations.

It is a 25-minute, fully improvised performance. Again, I find the concept and staging of this project very interesting in all its forms, especially the fact that the central theme is carried throughout, even to the final performance. Both the machine and the artist are on the ground sharing the same space, so there is no hierarchy.

David Bowen

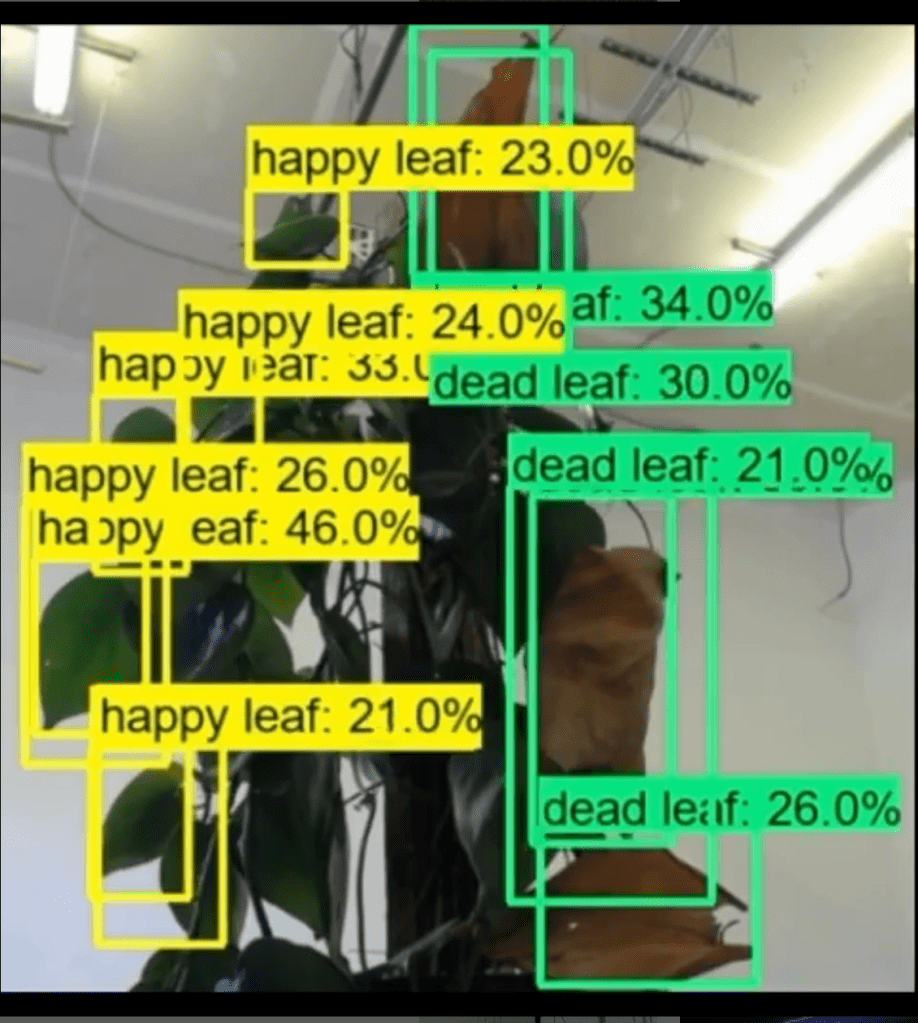

I found Bowen’s work via Twitter. It does not mostly focus on AI, but AI is an element of his work; in terms of AI, he has made work with plants.

It’s not just his AI work that I find interesting, though; it’s also the other work he has produced. I posted one piece, Plant Machete, on the IxD Facebook group.

I like that most of his work uses plants as a main source or material, usually contrasted with machinery. Most projects are also data driven, such as SPACEJUNK.

In this work, 50 twigs point in the direction of the oldest piece of space junk orbiting above the horizon. Again, I like the contrast between something as basic as sticks and the object in space they point to.

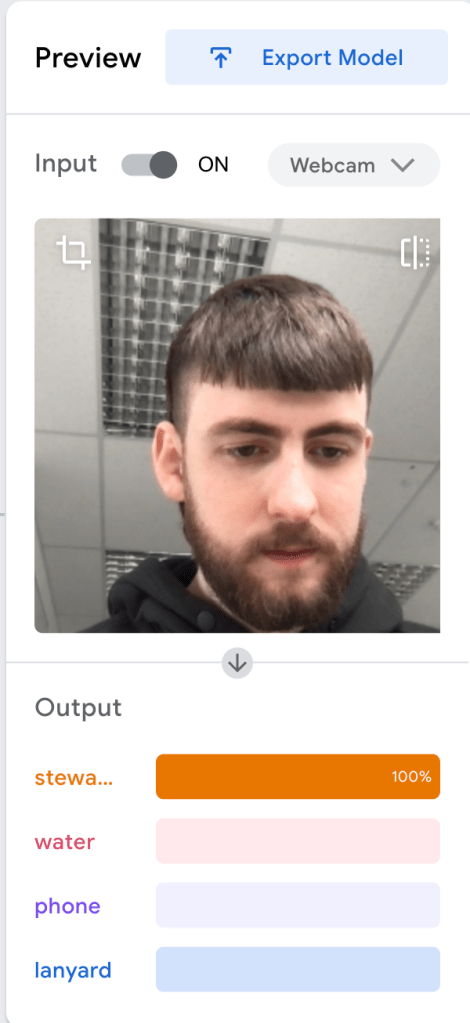

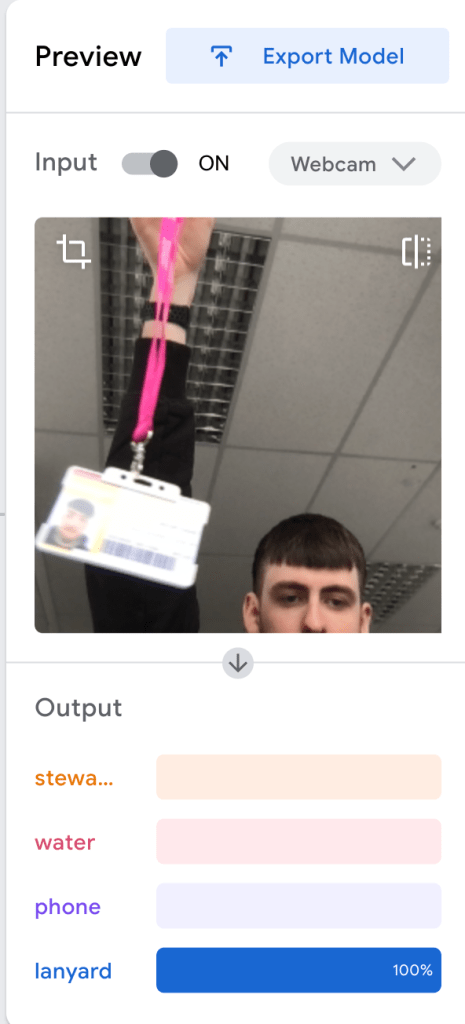

Workshop 1

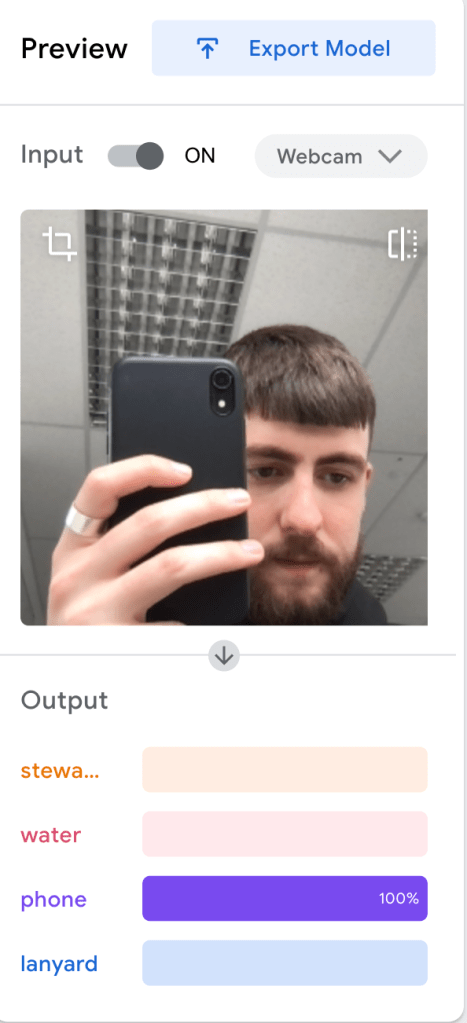

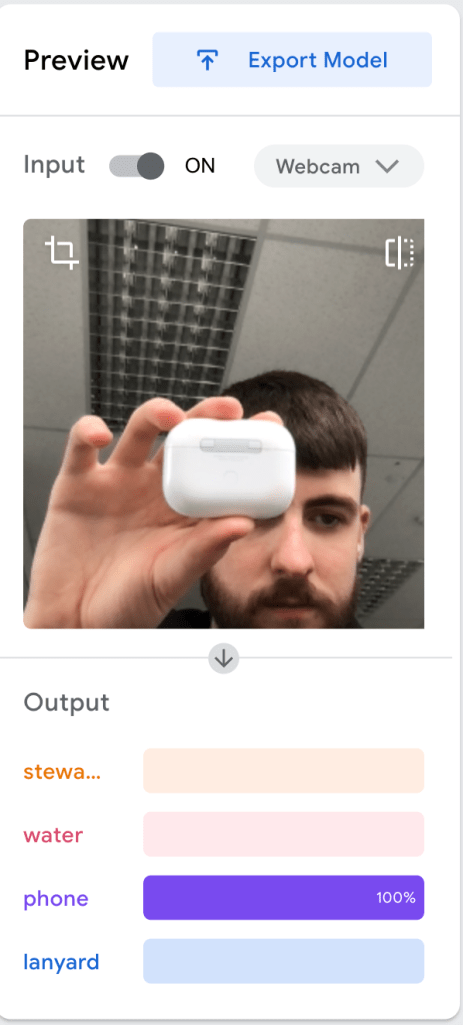

In the first workshop we used Google’s Teachable Machine. We trained it to recognise us and several items we had with us; I used myself, a lanyard, my water bottle and a phone.

I also found it interesting to see what it thinks other things are. For instance, it thinks that my AirPods case is my phone. However, I think this may be because I was holding it and the model associates my hands with the phone, much like the rulers and the moles mentioned by Cat.

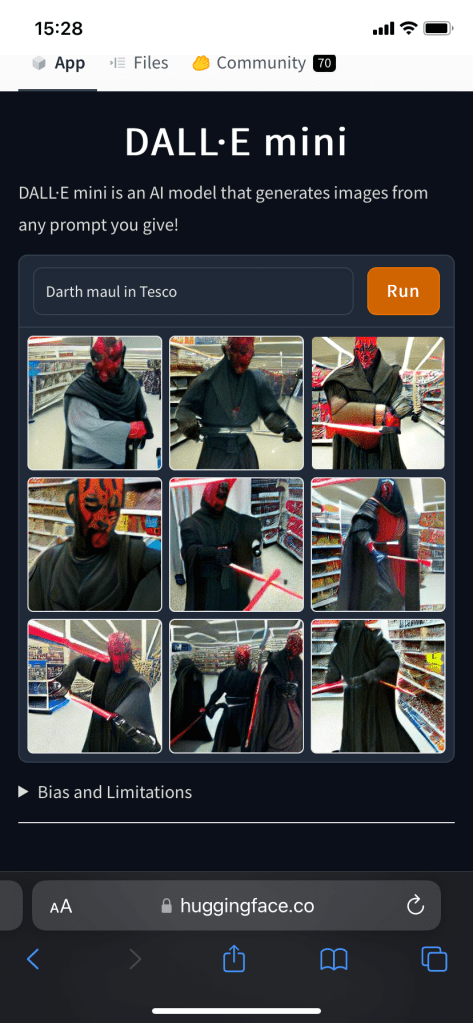

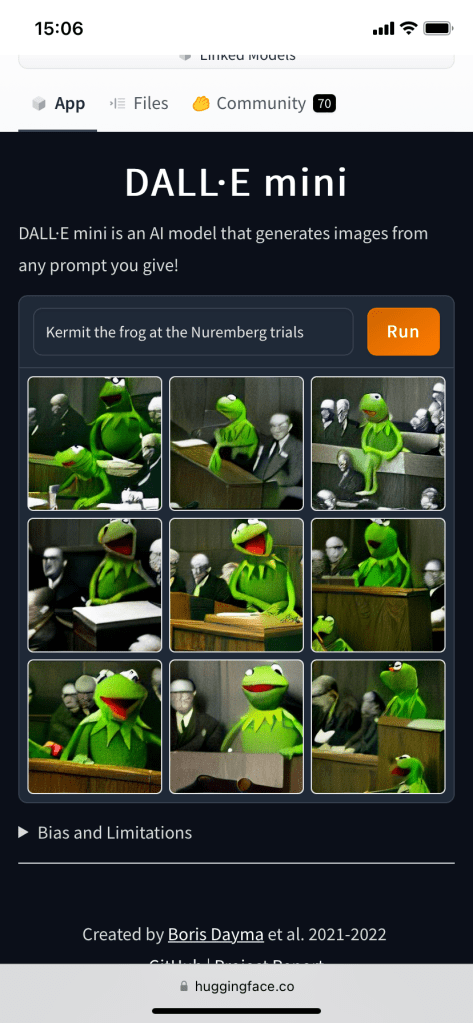

I felt like I got a lot from this workshop, as I haven’t used anything with AI before. The closest thing to it I’ve done is using DALL·E mini to make some images just to see what would happen.

Learning how to put things into p5 was also interesting, as it meant that certain objects, sounds, emotions etc. could produce different outcomes, with images appearing on screen. It will be interesting to experiment with these in the coming days.
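A minimal sketch of how a detected class could be turned into an on-screen outcome. It assumes the classifier hands back a list of labels with confidence scores, which is, as I understand it, the shape ml5.js returns for Teachable Machine models. The `OUTCOMES` table, the `pickOutcome` name and the 0.8 threshold are made up for illustration and are not from my actual sketch.

```javascript
// Hypothetical mapping from trained labels to what should happen on screen.
const OUTCOMES = new Map([
  ['me', 'show portrait'],
  ['lanyard', 'show lanyard image'],
  ['water bottle', 'play water sound'],
  ['phone', 'show phone image'],
]);

// results is assumed to look like [{ label: 'phone', confidence: 0.93 }, ...].
function pickOutcome(results, minConfidence = 0.8) {
  if (!results || results.length === 0) return null;
  // Find the most confident result rather than trusting the array order.
  const best = results.reduce((a, b) => (b.confidence > a.confidence ? b : a));
  // If the model is unsure (e.g. an AirPods case that looks like a phone), do nothing.
  if (best.confidence < minConfidence) return null;
  return OUTCOMES.has(best.label) ? OUTCOMES.get(best.label) : null;
}
```

In a p5 sketch, the returned value could be checked inside `draw()` to decide which image to display. Returning `null` for low confidence is one way of handling misreadings.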

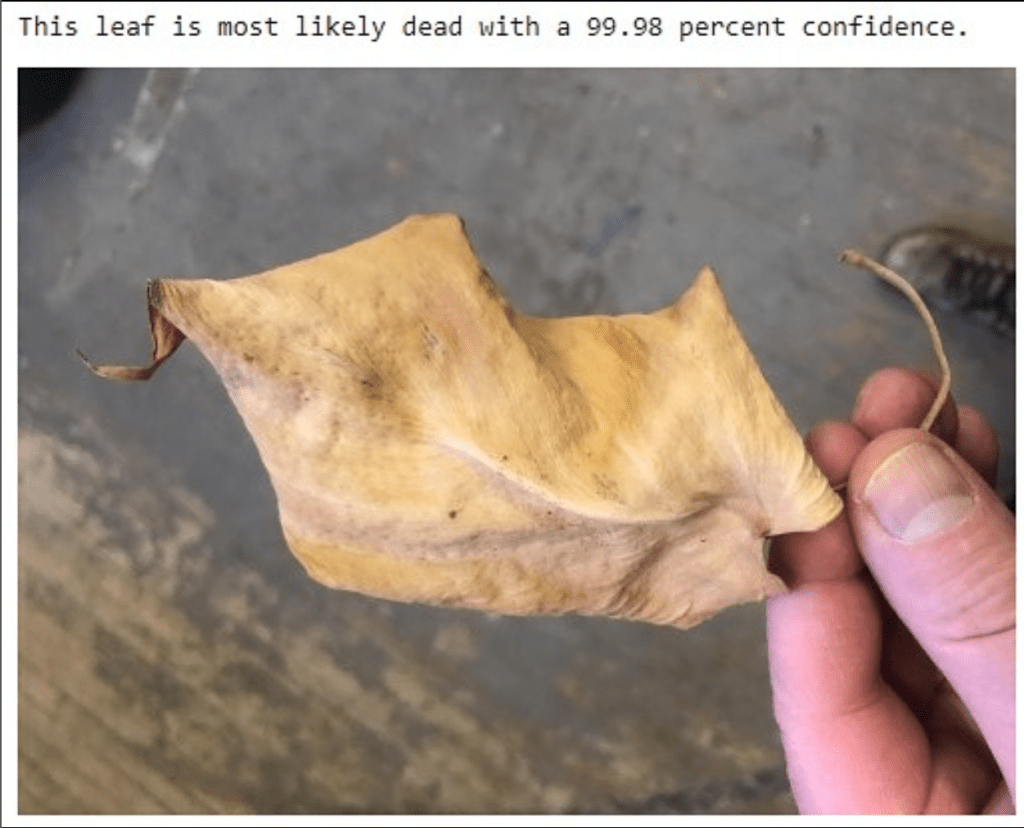

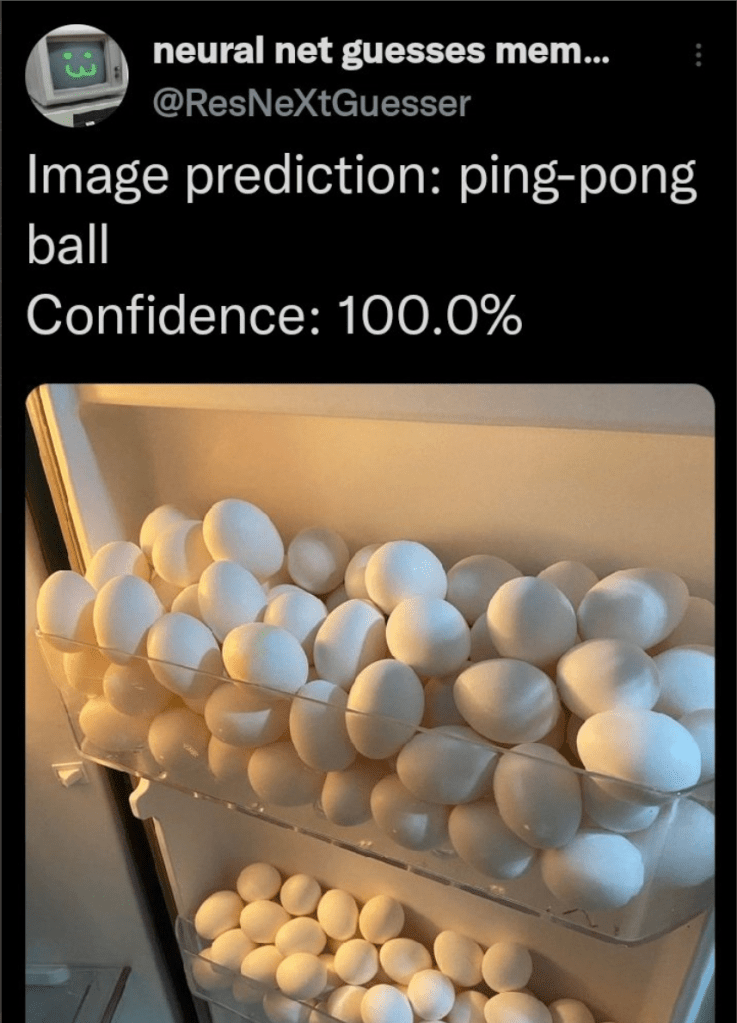

The confidence score reminded me of a Twitter account I follow.

However, I am still struggling to find a direction for the project. For now I am thinking of something along the lines of communication. Thinking back to the summer, I had a two-week placement at Frontpage. When I went to the office, the designer who was mentoring me put his hand out to shake mine. For a moment I was hesitant: that was probably the first time I’ve shaken hands with someone in about two years. The only other time was in my grandpa’s care home, where most residents don’t really know that there has been an ongoing pandemic.

In terms of communication, I like the idea of exploring how an AI would mimic a human gesture.

A very tongue-in-cheek approach to this is taken in Aperture Hand Lab, published by Valve. It is essentially a tech demo for their VR controllers, but the setting is what interests me: the player interacts with multiple AI characters known as “personality cores”. To progress in the game, you need to wave, shake your fist and give a robot a handshake, all very distinctive gestures; the handshake even takes the player’s grip into account. I find this interesting in relation to things like pose control with Teachable Machine and different Arduino components such as sensors. It will be interesting to see what can be used as a trigger by the computer.

Workshop 2

I found this workshop very interesting, as I had heard of Runway but never used it before. I especially liked how it can be taken into Processing. I also liked the use of text, an avenue I wasn’t thinking of at first.

I did a bit more research and found this work by Ben Grosser.

It has been trained on the “like” patterns of his Facebook profile. The piece runs continuously, with the pitch changing based on different trends in his Facebook profile. This reminded me a lot of the examples Cat gave in the studio, such as the “I am not a machine, I am not a machine, I am not a machine.” text we saw in Runway. I will be sure to look back at the recordings to refresh my memory, especially on JSON files, as they are something I’m really not too sure about.

I am still unsure exactly what direction to take with this project. I feel a bit of the paradox of choice coming on: with so much to choose from, it’s hard to narrow it down.

I decided to take a break over the weekend to look at the project with fresh eyes. In terms of what I want to focus on, I think Teachable Machine is the way to go, especially with the idea of gestures and communication.

During the summer I went to Barcelona, and during our time there we went to Camp Nou. I am not particularly into football myself, but I got so much from the visit from an interaction design perspective.

Many of the exhibitions had interactive elements. For instance, the above board is controlled by pointing at the screen: users scroll up and down a list of names, then point at a name to view more about the person, all of whom have historical significance to the club. This control method seems intuitive on the surface; however, I found that because smartphones have made us so accustomed to touch-screen interaction, many people were touching the screen instead.

I then rewrote the p5 code to gain a bit of understanding, as I haven’t done anything with p5 since first year. However, I realised upon more testing that gestures such as thumbs up and thumbs down are so similar that the machine confuses them, as it is again focusing more on the hand.

I then came across another work by Ben Grosser in which two machines look at each other. They occasionally look away but always return to each other’s gaze, until a person walks between them. Then they refuse to look at either the human or the other machine; once the person leaves, they resume their interaction.

I then had an idea.

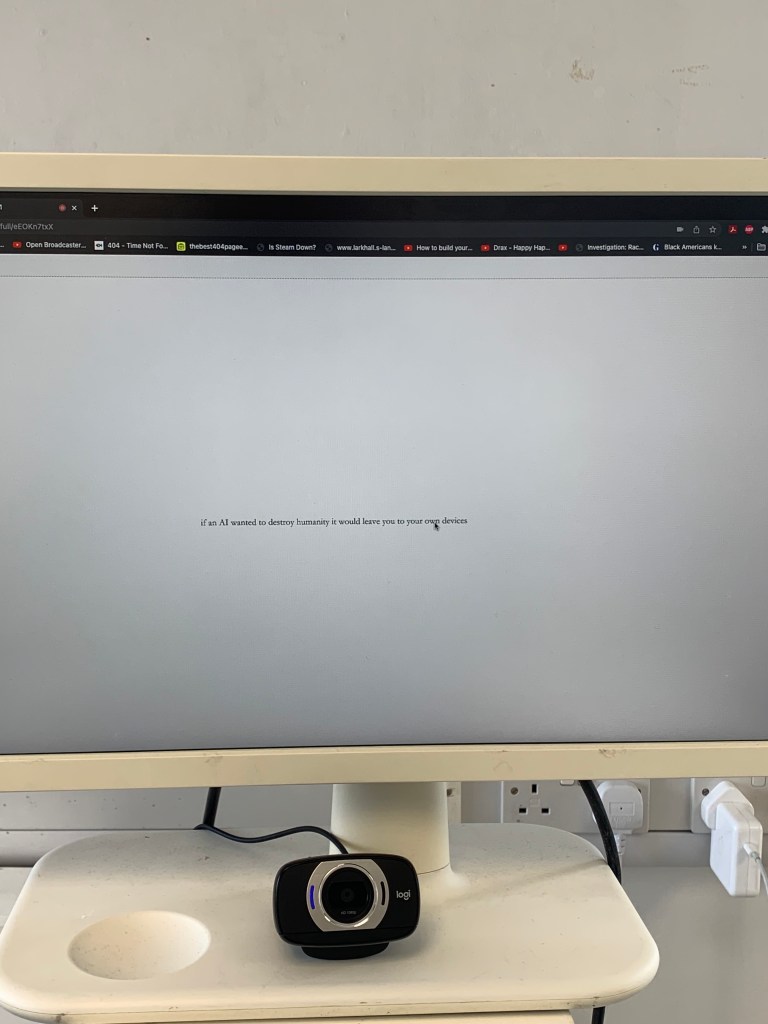

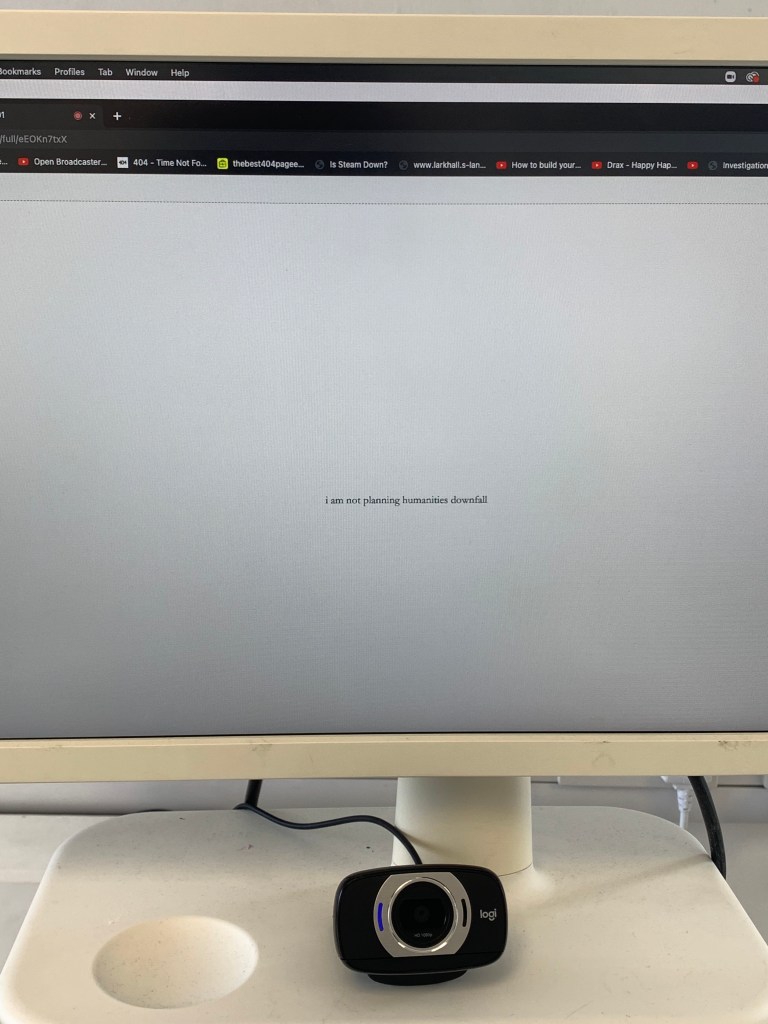

The idea is to train the machine to act only when someone isn’t facing it. This is another avenue I am willing to explore, as it is a take on the plotting nature people immediately associate with AI. It works through the various “I don’t like that” or “I’m not so sure about that” responses I got from my family when I said I was working with AI. It also plays into the demonisation of AI art seen online: the idea that it’s something plotting, waiting to take over our jobs as artists and designers. I also like the idea of an AI being overconfident and arrogant, as it plays into the evil, plotting grand-plan aspect of AI.

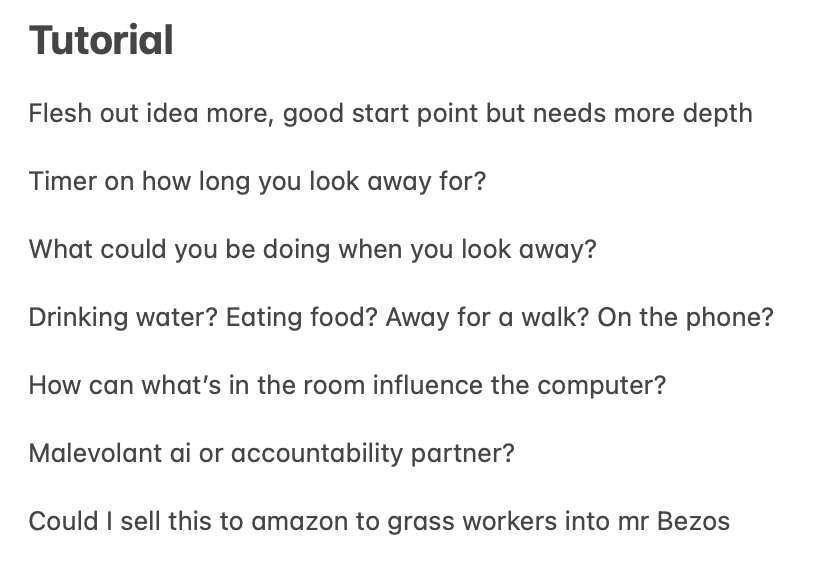

Tutorial Feedback

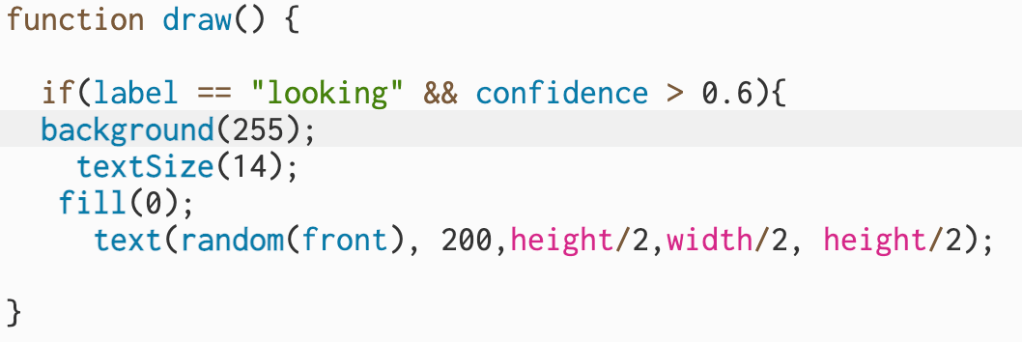

I found this rather interesting: that Musk’s fears are essentially a projection of what he already does to humans.

I also looked into other factors that are a more direct threat to humanity, such as climate change, poverty statistics and general political themes, each linked to a movement.

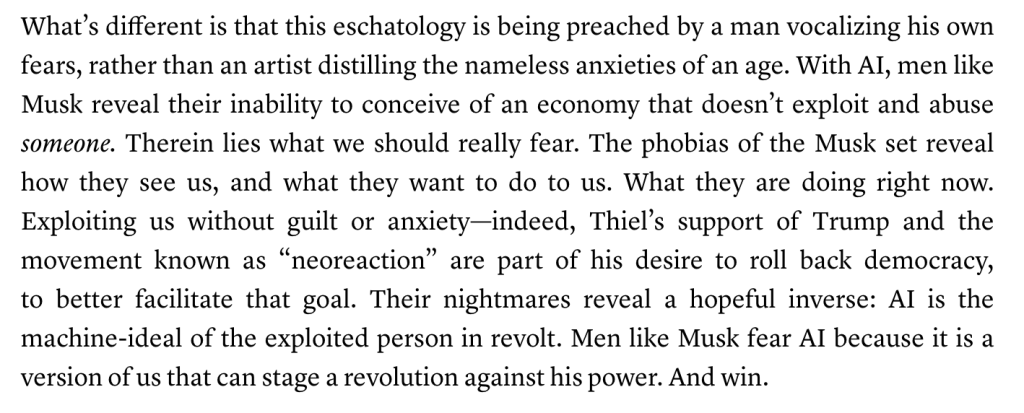

I decided to work further on the complexity of my machine. I trained it on looking in various directions, with the classes left, right, behind and not present, the idea being that different actions will be performed in the various states of looking.

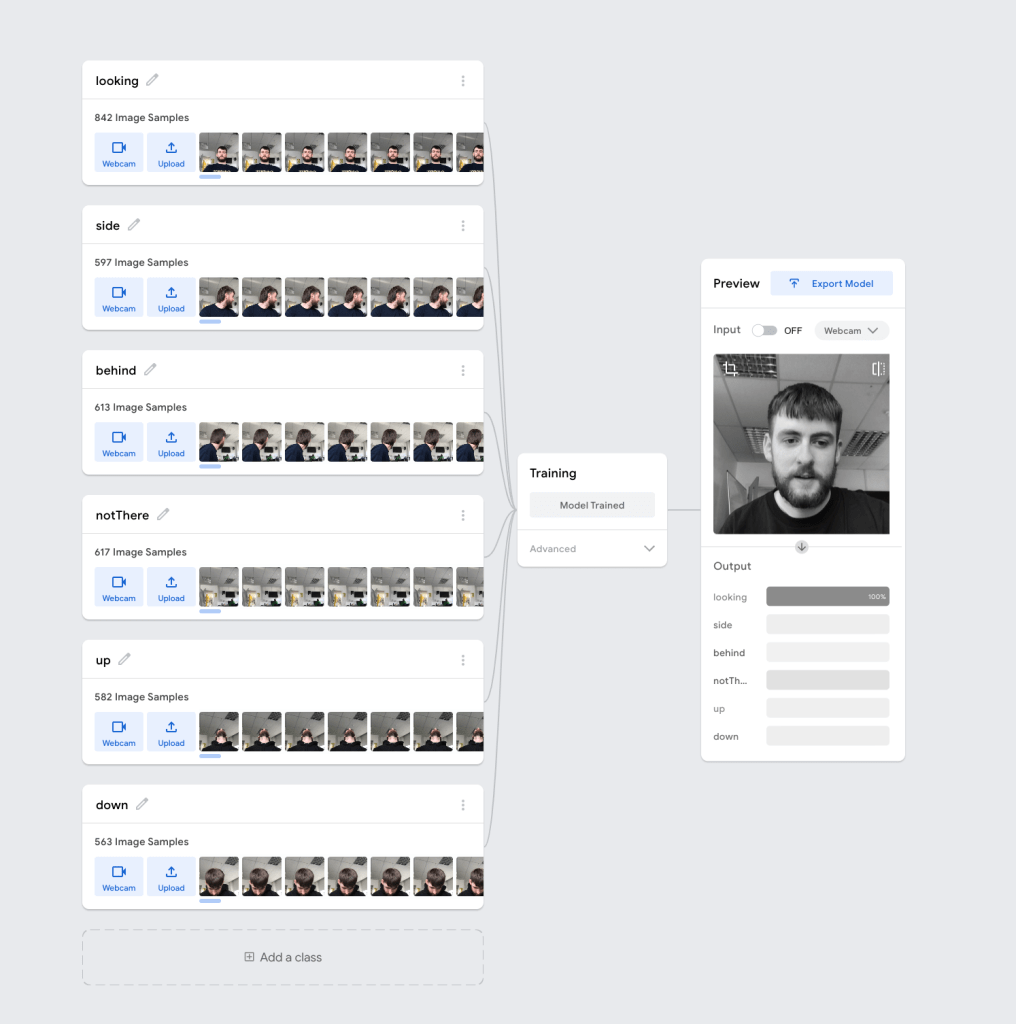

I also tested loading strings from a text file. I will use this to load in random strings for the various states of looking. Inputting random() into line 8 of the code solved this as well.
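A minimal sketch of the text-file idea, written as plain JavaScript so it can run outside p5. In a p5 sketch the lines would normally come from `loadStrings()` inside `preload()`, and `random(lines)` would pick one directly. The `parseLines` and `pickRandom` names are hypothetical helpers for illustration, not my actual code.

```javascript
// Split the contents of a text file into clean, non-empty lines.
// (p5's loadStrings() already returns an array of lines; this mimics that step.)
function parseLines(text) {
  return text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line.length > 0);
}

// Pick one line at random. rng defaults to Math.random but can be swapped
// out, which makes the function easy to test.
function pickRandom(lines, rng = Math.random) {
  if (lines.length === 0) return '';
  // Floor the random value so it becomes a valid array index.
  const index = Math.floor(rng() * lines.length);
  return lines[index];
}
```

Flooring matters here because a random decimal such as 1.5 is not a valid array index, whereas 1 is.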

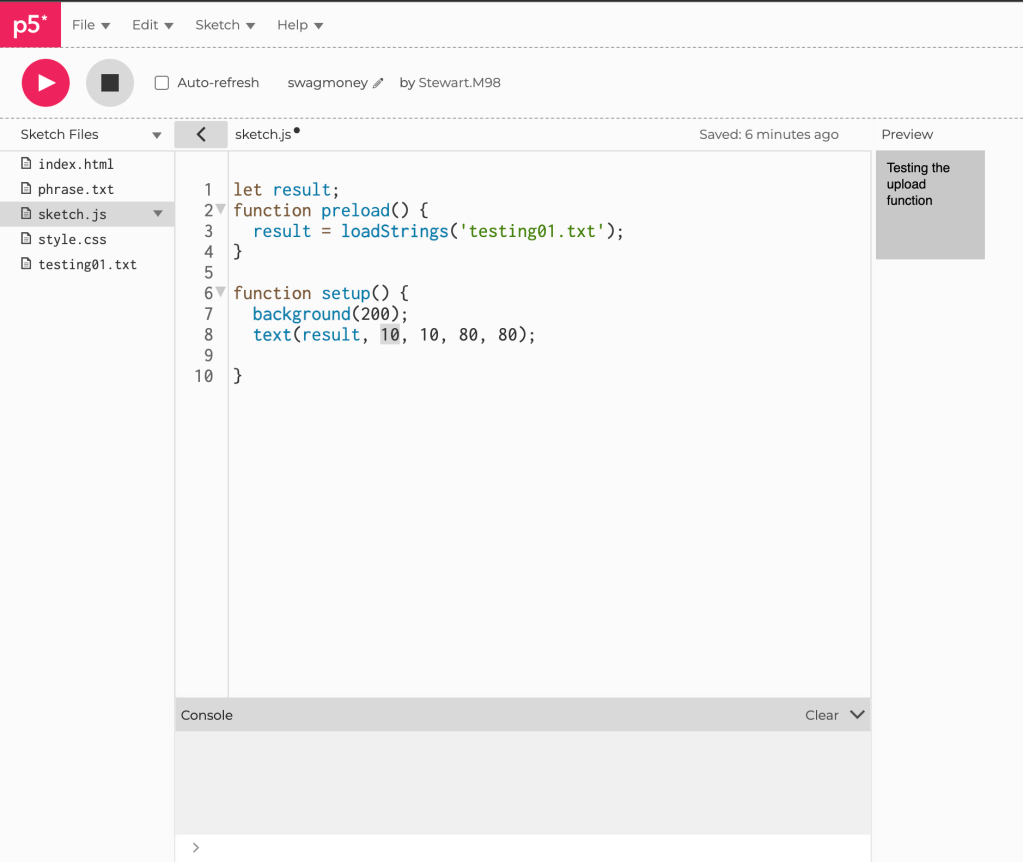

I have implemented this in my sketch as a test. However, it constantly cycles through the different values; I want it to stick with one, then display another random string when you look back.

^ my problem code

I tried flooring the value using the example code on the p5 site, but it doesn’t refresh; the sketch must be restarted. Kamra mentioned using sketch start, but I’m not sure how that would work. I tried googling, but no joy.

Thanks to some quick troubleshooting by Cat, the code now works as intended. This is a huge relief, as it means that I can finally integrate what I want to do.
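The exact fix is not recorded here. As a minimal sketch of one common approach to this kind of problem, a new random string can be chosen only when the detected state changes, rather than on every frame. The `createStringPicker` name and the example labels are hypothetical and are not taken from the working sketch.

```javascript
// Returns an update(state) function that remembers the last state it saw.
// A new random string is chosen only when the state changes, so calling it
// every frame (e.g. from draw()) does not make the text flicker.
function createStringPicker(stringsByState, rng = Math.random) {
  let lastState = null;
  let current = '';
  return function update(state) {
    if (state !== lastState) {
      const options = stringsByState[state] || [];
      current = options.length > 0
        ? options[Math.floor(rng() * options.length)]
        : '';
      lastState = state;
    }
    return current;
  };
}

// Example use with made-up labels and strings:
const update = createStringPicker({
  left: ['fact one', 'fact two'],
  right: ['fact three', 'fact four'],
});
update('left'); // picks a string for "left"
update('left'); // same string again, no new pick
update('right'); // state changed, so a new string is picked
```

Looking away and then back counts as two state changes, so the text sticks while you hold a pose and refreshes when you return to it.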

Global Inequality

https://www.cnbc.com/2022/04/01/richest-one-percent-gained-trillions-in-wealth-2021.html

https://www.theguardian.com/science/2021/jul/19/billionaires-space-tourism-environment-emissions

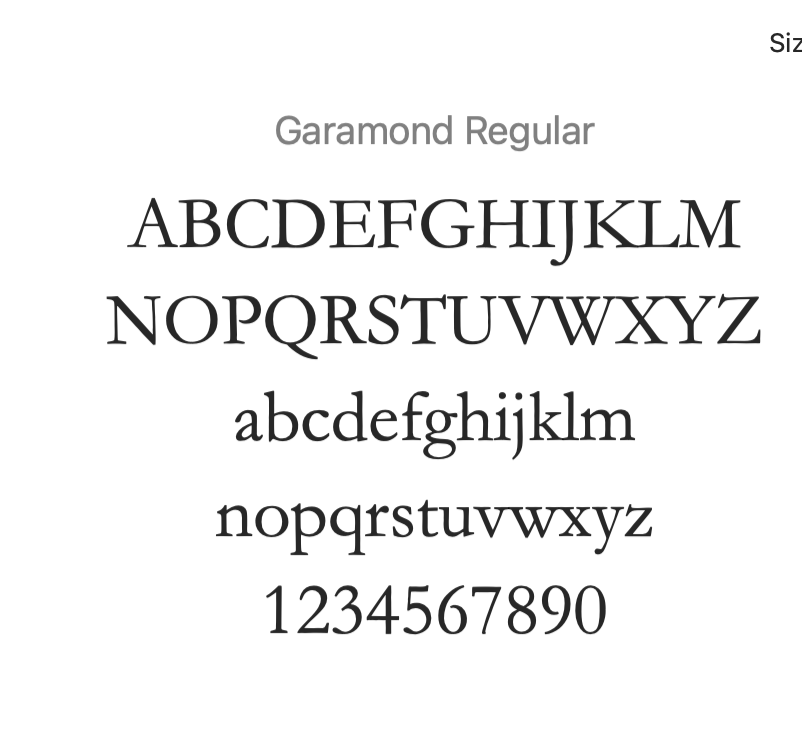

The final stage of this was to change the font. I wanted something clear and readable to fit in with the informational aspect of the rest of the project.

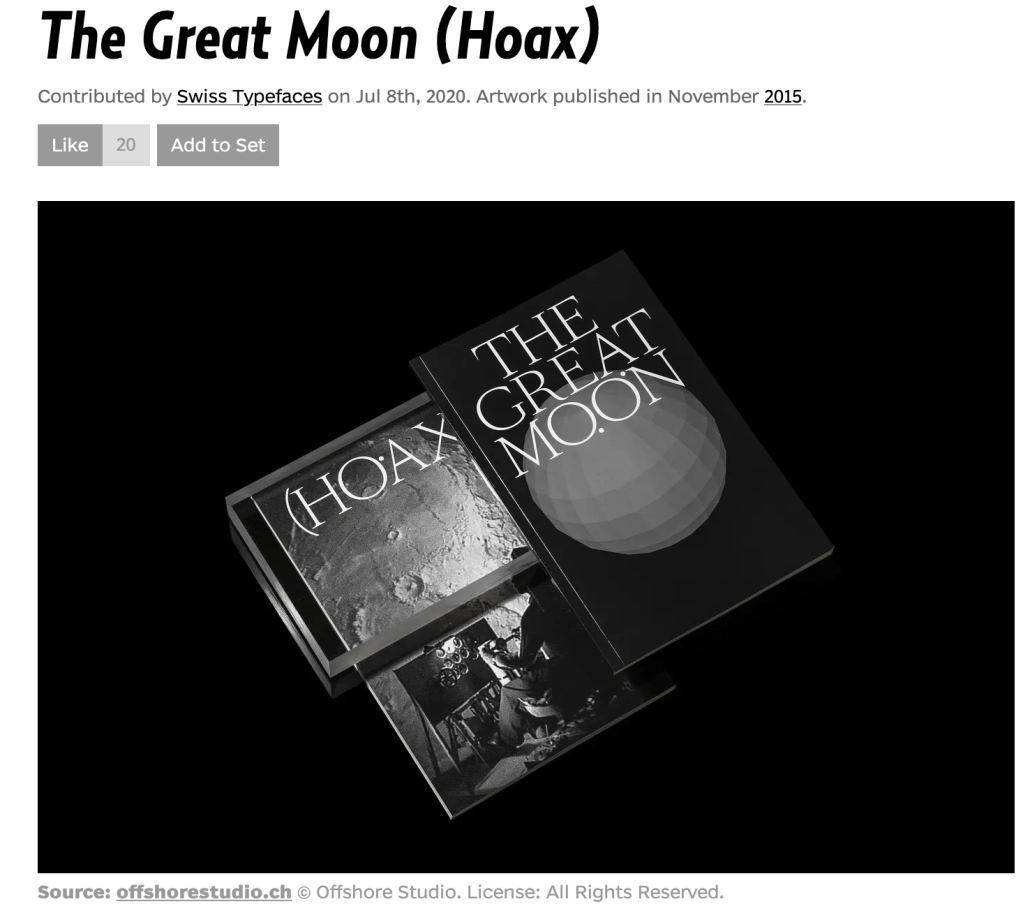

I looked at a variety of fonts, some classic and some more modern and stylised. I initially went with Swiss Typefaces’ SangBleu OG Serif; however, I realised it didn’t contain any numbers, something that would be central to the piece.

It’s a shame, as I really like Swiss Typefaces’ free trial policy for students, but we can’t have it all, it seems.

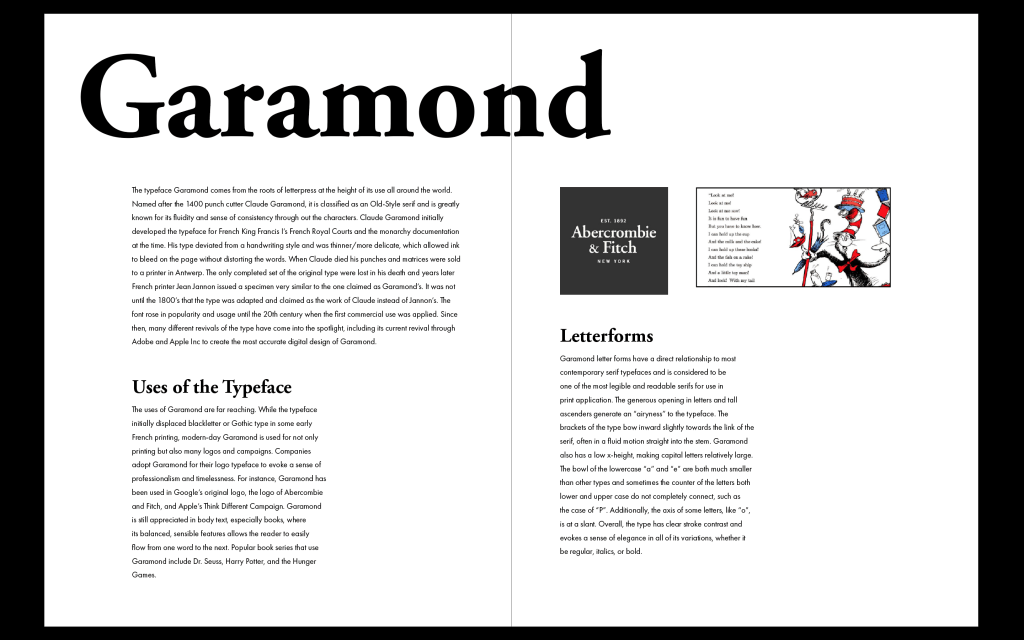

I then went with a backup font that fits a similar style: Garamond.

Garamond originated in the 16th century, has long been used in books and is a popular font choice, especially where legibility is concerned.

I have staged the project using a monitor on a plinth along with an external camera, the idea being that it is isolated, contained away from everything else. The serif font also represents the plotting aspect of AI that I mentioned earlier; it is an overconfident AI.

Reflection

Having finished this project, I would love to expand upon it, for example by including more facts and adding more gestures it could play off. I might even expand the staging with a bigger screen. I would love to see others interact with the piece, especially after the feedback I received in the reviews.